Advanced NIC Kernel Settings

To lower latency and cut unnecessary round trips, several net.ipv4 sysctl settings can be tuned for TCP connections. Here are some settings you can tweak:

- net.ipv4.tcp_low_latency: Historically, setting this to 1 told the kernel's TCP stack to favor low latency over throughput. On Linux 4.14 and later it is a legacy option that the kernel accepts but ignores, so it only matters on older kernels.

- net.ipv4.tcp_syn_retries: The number of times an unanswered SYN is retransmitted for an outgoing connection (default 6). Lowering it reduces the time spent on connection attempts that will never succeed, but be cautious not to set it too low, as legitimate connection attempts over lossy links could then fail.

- net.ipv4.tcp_synack_retries: The counterpart of tcp_syn_retries for incoming connections. It controls the number of times a SYN-ACK is retransmitted (default 5). Reducing it releases half-open connections sooner.

- net.ipv4.tcp_fin_timeout: How long an orphaned connection (one the application has already closed) stays in the FIN_WAIT_2 state waiting for the peer's FIN before the kernel aborts it (default 60 seconds). Reducing it releases resources faster when connections are closed, but setting it too low might cause issues with slow or congested networks.

- net.ipv4.tcp_keepalive_time, net.ipv4.tcp_keepalive_probes, and net.ipv4.tcp_keepalive_intvl: The idle time before the first keepalive probe, the number of unanswered probes tolerated, and the interval between probes (defaults 7200 seconds, 9 probes, and 75 seconds). Lowering them detects unresponsive connections faster. They only apply to sockets that enable SO_KEEPALIVE.

It's important to note that these settings can have significant effects on your system's networking behavior, and the optimal values will vary depending on your specific use case and network conditions. When adjusting these settings, be sure to monitor your system's performance and network latency to ensure the changes have the desired effect and don't negatively impact system stability or performance.
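To see what the retry and keepalive values mean in wall-clock terms, the sketch below adds up the timeouts. It assumes a 1-second initial retransmission timeout that doubles after each attempt and is capped at 120 seconds, which matches mainline Linux defaults but may differ on a given route or kernel build. The function names are illustrative and not part of any library.

```python
# Sketch: translate retry counts and keepalive values into seconds.
# Assumptions (not from the settings themselves): 1 s initial RTO,
# doubling after every attempt, and a 120 s cap on any single timeout.

INITIAL_RTO_S = 1.0   # assumed initial retransmission timeout
MAX_RTO_S = 120.0     # assumed upper bound for one timeout


def handshake_give_up_time(retries: int) -> float:
    """Seconds until the handshake is abandoned if the peer never answers.

    The first transmission plus `retries` retransmissions each wait for
    one timeout, and the timeout doubles after every attempt.
    """
    return sum(min(INITIAL_RTO_S * 2 ** i, MAX_RTO_S) for i in range(retries + 1))


def keepalive_detection_time(idle_s: int, probes: int, interval_s: int) -> int:
    """Seconds from the last received data until a dead peer is declared."""
    return idle_s + probes * interval_s


if __name__ == "__main__":
    for retries in (6, 4, 3):
        print(retries, "retries ->", handshake_give_up_time(retries), "s")
    print("keepalive 7200/9/75 ->", keepalive_detection_time(7200, 9, 75), "s")
    print("keepalive 600/5/15  ->", keepalive_detection_time(600, 5, 15), "s")
```

Under these assumptions, a retry count of 6 gives up after about 127 seconds, 4 after about 31 seconds, and 3 after about 15 seconds. The keepalive defaults need 7875 seconds to declare a peer dead, while 600, 5, and 15 need 675 seconds.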

Beyond these timers and retry counts, the following factors also affect latency and the number of round trips:

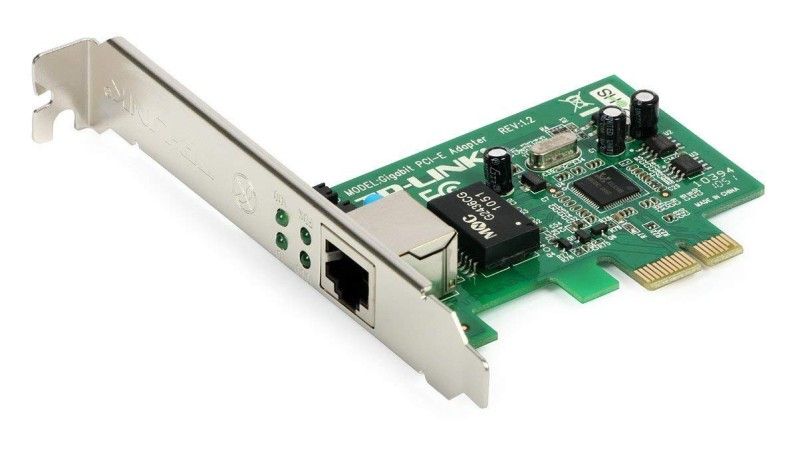

- Use a high-performance network interface card (NIC) with hardware offloading capabilities.

- Choose a low-latency congestion control algorithm, such as BBR or DCTCP, if available on your system. This can be set via the net.ipv4.tcp_congestion_control parameter.

- Optimize your network infrastructure, including switches, routers, and cabling, to minimize latency.

- Reduce the distance between your system and the remote server, if possible. Network latency increases with distance, so minimizing the physical distance can help in reducing latency.

- Use a Content Delivery Network (CDN) or edge computing solutions to bring content closer to users, reducing latency.

Remember that network optimization is a complex task, and it's essential to consider all aspects of your system and network infrastructure when working to minimize latency and back and forth communication.

Here are some example sysctl settings aimed at low latency and fewer round trips. They are starting points rather than universally optimal values, so measure your system's performance before and after applying them.

# Optimize for low latency (legacy option; ignored on Linux 4.14 and later)

net.ipv4.tcp_low_latency = 1

# Reduce SYN retries

net.ipv4.tcp_syn_retries = 4

# Reduce SYN-ACK retries

net.ipv4.tcp_synack_retries = 3

# Decrease FIN timeout

net.ipv4.tcp_fin_timeout = 20

# Adjust keepalive settings

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 5

net.ipv4.tcp_keepalive_intvl = 15

# Use a low-latency congestion control algorithm (e.g., BBR)

net.ipv4.tcp_congestion_control = bbr

To apply these settings, create a new file named low_latency.conf in the /etc/sysctl.d/ directory, and copy the settings above into the file. Then, run the following command to apply the settings:

sudo sysctl --system

These values are examples only. Re-measure network latency after every change, and revert any setting that hurts system stability or performance.
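One way to confirm that the file took effect is to compare it with the values the running kernel reports under /proc/sys. The sketch below is a minimal checker under stated assumptions: the helper names are hypothetical, the parser handles only simple `key = value` lines with `#` or `;` comments, and keys are mapped to paths by replacing dots with slashes, which is not correct for keys that embed an interface name containing a dot.

```python
from pathlib import Path
from typing import Dict, Optional, Tuple


def parse_sysctl_conf(text: str) -> Dict[str, str]:
    """Parse 'key = value' lines, skipping blank lines and comments."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if sep:  # ignore lines without an '=' sign
            settings[key.strip()] = value.strip()
    return settings


def live_value(key: str, root: Path = Path("/proc/sys")) -> Optional[str]:
    """Return the running kernel's value, or None if the key is not exposed."""
    try:
        return (root / key.replace(".", "/")).read_text().strip()
    except OSError:
        return None


def report_mismatches(
    conf_text: str, root: Path = Path("/proc/sys")
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each key whose live value differs to (wanted, actual)."""
    mismatches: Dict[str, Tuple[str, Optional[str]]] = {}
    for key, wanted in parse_sysctl_conf(conf_text).items():
        actual = live_value(key, root)
        # Compare token by token so tabs versus spaces do not matter.
        if actual is None or actual.split() != wanted.split():
            mismatches[key] = (wanted, actual)
    return mismatches


if __name__ == "__main__":
    conf = Path("/etc/sysctl.d/low_latency.conf")
    if conf.exists():
        for key, (wanted, actual) in report_mismatches(conf.read_text()).items():
            print(f"{key}: wanted {wanted!r}, kernel reports {actual!r}")
```

An empty report means every key in the file matches the live value. A key reported with `None` is not exposed by the running kernel, which is worth checking before assuming the setting is active.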

For low latency and audio streaming, BBR (Bottleneck Bandwidth and Round-trip propagation time) is likely the best choice among the three congestion control algorithms compared here: BBR, DCTCP, and H-TCP. Here's a brief overview of each algorithm and why BBR is a good fit for this use case:

BBR: BBR is a modern congestion control algorithm developed by Google that aims to maximize bandwidth utilization while minimizing latency. It does this by estimating the available bandwidth and round-trip time (RTT) and adjusting the sending rate accordingly. BBR is designed to work well in various network conditions and is effective at reducing latency, making it a suitable choice for low latency and audio streaming applications.

DCTCP (Data Center TCP): DCTCP is a congestion control algorithm designed specifically for data center networks with very low latency and high-speed links, and it relies on switches marking packets with ECN (Explicit Congestion Notification). It is not optimized for general internet traffic and may not perform well in networks with higher latencies or varying network conditions, making it less suitable for audio streaming applications over the public internet.

H-TCP (sysctl name htcp): H-TCP is a loss-based congestion control algorithm designed for high-speed, long-distance networks. It aims to maximize throughput while being fair to other flows sharing the same network resources. However, it doesn't specifically focus on minimizing latency, which can be important for audio streaming applications.

Given these characteristics, BBR is likely the best choice for low latency and audio streaming among the three algorithms. To enable BBR as the default congestion control algorithm on your system, you can set the following sysctl parameter:

net.ipv4.tcp_congestion_control = bbr

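Note that BBR is available in Linux kernel versions 4.9 and later, but it is often built as a module (tcp_bbr) that must be loaded before the name is accepted. Before enabling it, confirm that bbr appears in net.ipv4.tcp_available_congestion_control, and check your kernel version by running the command: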

uname -r
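The kernel version alone does not say whether an algorithm can actually be selected. As a hedged sketch, the snippet below reads the list the kernel publishes in /proc/sys/net/ipv4/tcp_available_congestion_control, which contains only algorithms that are built in or already loaded. The helper names are hypothetical, and on systems without that file the list is simply empty.

```python
from pathlib import Path
from typing import List

# Standard procfs location of the list of registered algorithms.
AVAILABLE = Path("/proc/sys/net/ipv4/tcp_available_congestion_control")


def parse_algorithms(text: str) -> List[str]:
    """Split the kernel's space-separated algorithm list into names."""
    return text.split()


def available_algorithms(path: Path = AVAILABLE) -> List[str]:
    """Return registered algorithms, or [] if the file cannot be read."""
    try:
        return parse_algorithms(path.read_text())
    except OSError:
        return []


def can_enable(name: str, algorithms: List[str]) -> bool:
    """True if `name` can be selected without loading a module first."""
    return name in algorithms


if __name__ == "__main__":
    algos = available_algorithms()
    print("available:", ", ".join(algos) or "(none found)")
    if not can_enable("bbr", algos):
        print("bbr is not registered; the tcp_bbr module may need loading")
```

If bbr is missing from the list on a 4.9 or later kernel, loading the tcp_bbr module (for example with `sudo modprobe tcp_bbr`) usually makes it appear, provided the kernel was built with it.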

The net.core parameters in sysctl are related to the core networking settings in the Linux kernel. Some of these settings can be adjusted to improve network performance and potentially benefit audio streaming applications. Here are a few net.core settings that you can consider optimizing:

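net.core.rmem_max and net.core.wmem_max: These settings cap the receive and send socket buffer sizes, in bytes, that an application can request with SO_RCVBUF and SO_SNDBUF. Raising them helps high-bandwidth, high-latency connections. An audio stream is low-bandwidth by comparison, so it rarely needs buffers this large, and TCP's automatic buffer tuning is governed separately by net.ipv4.tcp_rmem and net.ipv4.tcp_wmem: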

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.rmem_default and net.core.wmem_default: These settings control the default receive and send socket buffer sizes in bytes, respectively. They mainly affect non-TCP sockets such as UDP, because TCP takes its defaults from net.ipv4.tcp_rmem and net.ipv4.tcp_wmem. Be cautious not to set them too high, as every socket then consumes more memory:

net.core.rmem_default = 262144

net.core.wmem_default = 262144
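A common rule of thumb for sizing socket buffers is the bandwidth-delay product: the number of bytes in flight on a path at a given rate and round-trip time. The sketch below works through that arithmetic with integer math. The 320 kbit/s stream rate and 100 ms round-trip time are illustrative assumptions, not measurements, and the function names are hypothetical.

```python
def bdp_bytes(bandwidth_bits_per_s: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes.

    bits/s * (ms / 1000) gives bits in flight; dividing by 8 gives bytes.
    """
    return bandwidth_bits_per_s * rtt_ms // 8000


def max_rate_bits_per_s(buffer_bytes: int, rtt_ms: int) -> int:
    """Highest rate one connection can sustain with a given buffer size."""
    return buffer_bytes * 8 * 1000 // rtt_ms


if __name__ == "__main__":
    # Assumed example: a 320 kbit/s audio stream over a 100 ms path.
    print("audio stream BDP:", bdp_bytes(320_000, 100), "bytes")
    # Assumed example: a 1 Gbit/s bulk transfer over the same path.
    print("1 Gbit/s BDP:", bdp_bytes(1_000_000_000, 100), "bytes")
    # What a 16 MiB buffer allows at 100 ms.
    print("16 MiB buffer:", max_rate_bits_per_s(16_777_216, 100), "bit/s")
```

With these assumed numbers the audio stream keeps only about 4000 bytes in flight, while a 1 Gbit/s transfer needs about 12.5 MB. A 16 MiB maximum is therefore sized for bulk transfers, and the 262144-byte default already exceeds what a single audio stream requires.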

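net.core.optmem_max: This setting determines the maximum amount of ancillary (option) memory available to each socket. Increasing it can help when an application uses socket options or control messages that need additional memory. Note that 20480 has long been the default on 64-bit systems, so the line below pins that value rather than raising it: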

net.core.optmem_max = 20480

net.core.netdev_max_backlog: This setting specifies the maximum number of received packets that can wait in the per-CPU input queue when an interface delivers them faster than the kernel can process them (default 1000). Beyond this limit the kernel drops packets, so increasing the value can help under high packet rates:

net.core.netdev_max_backlog = 2000

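net.core.somaxconn: This setting is the upper limit on the backlog an application passes to listen(), that is, the maximum number of pending connections in a socket's accept queue. A larger backlog requested by the application is silently reduced to this value. The default is 128 on kernels before 5.4 and 4096 from 5.4 onward, so the line below matters mainly on older kernels: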

net.core.somaxconn = 4096
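The interaction between an application's listen() backlog and this limit can be sketched in a few lines. The helper names below are hypothetical, and the fallback of 4096 used when the file cannot be read is an assumption for illustration only.

```python
from pathlib import Path

SOMAXCONN = Path("/proc/sys/net/core/somaxconn")


def read_somaxconn(path: Path = SOMAXCONN, fallback: int = 4096) -> int:
    """Read the live limit, or return `fallback` if it is unavailable."""
    try:
        return int(path.read_text())
    except (OSError, ValueError):
        return fallback


def effective_backlog(requested: int, somaxconn: int) -> int:
    """The kernel caps the backlog passed to listen() at somaxconn."""
    return min(requested, somaxconn)


if __name__ == "__main__":
    limit = read_somaxconn()
    for requested in (128, 8192):
        print(f"listen({requested}) -> {effective_backlog(requested, limit)}")
```

Raising net.core.somaxconn has no effect unless the application also requests a larger backlog, so both values need to be checked when accept queues overflow.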

Remember that the optimal values for these settings will depend on your specific use case, hardware, and network conditions. Monitor your system's performance and network latency after applying any changes to ensure they have the desired effect and don't negatively impact system stability or performance.